Ever since I started doing TDD I've been looking for a way to test UI and JavaScript which would let me develop UI with unit-test-style feedback and integrate my UI testing into a Continuous Integration setup. A few months ago we tried using QUnit at work for testing client-side scripts which rely on 3rd party vendors (Google Maps) to provide us with data for our application. I always wanted to find time to build on that experience, because we never got much past "let's-try-this-thing-out": we spent a few hours seeing what could and could not be accomplished and left it at that.

So - I decided a few days ago to sit down and spend a few evenings setting up a Continuous Integration environment which would

- A) Run client-side tests on a Continuous Integration server

- B) Provide TDD-style feedback on both NUnit tests and client-side tests

Disclaimer: If you don't have experience with Continuous Integration environments you might find this goes a bit over your head - I strongly recommend this article written by Martin Fowler, and if you really want to dig deep into the subject, "Continuous Integration - Improving Software Quality and Reducing Risk" is a must-read.

How it was done

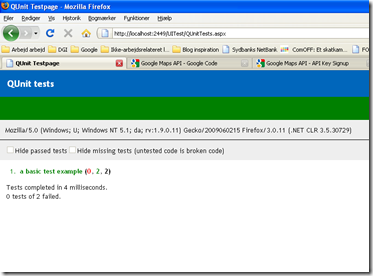

I decided to use QUnit due to the fact that it is the clientside scripting library used to test JQuery. If it good enough for those people I guess it's as good as it gets - I didn't want to even try and dig up some arbitrary, halfbaked library when QUnit was such an obvious choice. I won't go into details about the "what" and "how" of QUnit - what it does is that it provides you with feedback on a webpage which exercises your tests written in Javascript - like this:
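For readers who have not seen QUnit, a test file might look roughly like the sketch below. It assumes a reasonably current QUnit release, where tests are registered through `QUnit.module` and `QUnit.test` (older releases used global `test()` and `equals()` functions instead). The `formatDistance` function is a made-up example, not code from my project.

```javascript
// Hypothetical function under test; in a real project it would live in
// one of the custom JavaScript files that the test page includes.
function formatDistance(meters) {
  if (meters < 1000) {
    return meters + " m";
  }
  return (meters / 1000).toFixed(1) + " km";
}

// Register the tests only when QUnit has been loaded by the test page,
// so the file can also be loaded where QUnit is not available.
if (typeof QUnit !== "undefined") {
  QUnit.module("formatDistance");

  QUnit.test("short distances are shown in meters", function (assert) {
    assert.strictEqual(formatDistance(250), "250 m");
  });

  QUnit.test("long distances are shown in kilometers", function (assert) {
    assert.strictEqual(formatDistance(1500), "1.5 km");
  });
}
```

The test page then only has to include QUnit, the script under test and the test file; QUnit takes care of rendering the results.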

So - you write a test in a script, execute it in a browser and get a list of passed and failed tests. If everything is OK the top banner is green (left). If one or more tests fail the banner is red (right). This is controlled by a CSS class on the banner, either "pass" or "fail". With that in mind, and with a little knowledge of WatiN, I decided to just write a unit test in WatiN, because it would allow me to write a test resembling the following:

```csharp
[Test]
public void ExerciseQUnitTests()
{
    using (var ie = new IE("http://(website)/UITest/QUnitTests.aspx"))
    {
        Assert.AreEqual("pass", ie.Element("banner").ClassName, "QUnittest(s) have failed");
    }
}
```

...where QUnitTests.aspx is the page exercising my QUnit tests. The test checks for a specific class on the top banner - if the class is "fail", one or more tests have failed, so the unit test fails and causes a red build. There is at least one obvious gotcha to this approach: You only get to know that one or more client-side tests have failed. You don't get to know which one or why it failed. Not very TDD'ish but it'll do for now.
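One possible way around that gotcha, which I have not built into this solution, is to let the test page itself publish the names of the failing tests so the browser-driven test can read them and put them in its failure message. The sketch below assumes a QUnit release that offers the `QUnit.testDone` and `QUnit.done` callbacks; the `describeFailures` helper and the `qunit-failure-summary` element id are names I made up for illustration.

```javascript
// One entry per finished test, filled in by the QUnit.testDone callback below.
var testResults = [];

// Turn the collected results into one line per failing test.
// Written with a plain loop so it also runs in older browsers such as IE 7/8.
function describeFailures(results) {
  var lines = [];
  for (var i = 0; i < results.length; i++) {
    var r = results[i];
    if (r.failed > 0) {
      var prefix = r.module ? r.module + " / " : "";
      lines.push(prefix + r.name + ": " + r.failed + " of " + r.total + " assertions failed");
    }
  }
  return lines.join("\n");
}

// Hook into QUnit only when it is loaded (i.e. on the test page).
if (typeof QUnit !== "undefined") {
  // Called after every test with its name, module and assertion counts.
  QUnit.testDone(function (details) {
    testResults.push(details);
  });

  // Called once after the whole run: publish the summary in the page.
  QUnit.done(function () {
    var summary = document.createElement("pre");
    summary.id = "qunit-failure-summary"; // hypothetical id, not part of QUnit
    summary.appendChild(document.createTextNode(describeFailures(testResults)));
    document.body.appendChild(summary);
  });
}
```

The unit test on the build server could then read the text of that element and use it as the assertion message, so a red build would at least tell you which client-side tests broke.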

Here's a list of the things I needed to do once I had my WatiN test written:

- I created a new project on my private CruiseControl.NET server which downloads the source code from my VS project, compiles it using MSBuild and executes the unit tests in my test project. I battled for an hour or so with WatiN because it needs to have ApartmentState explicitly set to STA (single-threaded apartment). You won't have any problem when running your test in Visual Studio only, but whenever you try to run the WatiN test outside Visual Studio you get - well, funny errors basically.

- I pointed the website to the build output folder in Internet Information Services (IIS)

- Then I ran into another problem - QUnit apparently didn't work very well with Internet Explorer 7 (or so I thought) - the website simply didn't output any test results on my build server. It wasn't a 404 error or anything like that - the page was just plain blank - so I had working tests on my laptop and failing tests on my build server. Without thinking much about it I upgraded to Internet Explorer 8 on my build server. Not much of a deal, so I did it - just to find out that the web page didn't output any results in IE 8 either. After a while of "What the f*** is going on" I started thinking again and vaguely remembered a similar problem I had about half a year ago... The problem back then was that scripting in IE7 is disabled by default on Windows Server 2008 - which of course was the problem here as well. So I enabled scripting and finally got my ExerciseQUnitTests test to pass. Guess what happened when I forced a rebuild: GREEN LIGHTS EVERYWHERE. Yeeeehaaaaaaaa :o)

- Last, but not least, I found out that CruiseControl.NET from now on will have to run in console mode in order to interact with the desktop in a timely fashion - because it needs to fire up a web browser - oh dear... I need to look into that one, because I consider console apps running in a server environment to be heavy technical debt that you need to work your way around somehow. But again: It'll do for now even though I find it a little awkward.

Conclusion: After a good night's work I got the A part solved - and after another two nights I cracked the B part open as well. I'm now able to write QUnit tests testing code in my custom JavaScript files, exercise the tests locally in a browser, check everything in and let a build server exercise the tests for me, just as if my client-code tests were first-class citizens in TDD. Sweeeeeet.... The source code is available for download here - please provide feedback on this solution and share experiences on the subject with me in the comments :o)

Links:

- Continuous Integration article by Martin Fowler

- QUnit - unit test runner for the jQuery project

- CruiseControl.NET - an open source Continuous Integration server for Windows

- WatiN - web test automation tool for .NET